Dev Blog - Faking Wind Flow in Interior Spaces

This developer blog post was written by our Principal Technical Artist, Andy Green, and was originally shared on his personal blog. It gives an overview of some of the technical challenges that Andy and the team are having to overcome to simulate wind flow in enclosed spaces in Lost Skies.

The problem

There are a number of environmental effects we come to expect in games, and a number of details we take for granted; wind is one of these. It’s such an intuitive feature of our daily lives that we expect it to be present, and just accept it if it feels right. We don’t see wind itself, but rather how elements of our world respond to its effects: trees swaying, grass flattening in patches, leaves and dust swirling around us.

In Lost Skies, the wind is a game mechanic as well as a selection of ambient visual effects. It’ll be the driving force behind sail-powered ships, and as the game is set in a largely open sky across floating islands, there will be a lot of it. If a player is standing on the deck of their ship, and the wind is blowing diagonally across their flight path, you’d expect to see scarves and hair blown in that direction. If you’re parallel to the wind, you’d expect to see the sail slack and flapping wildly, rather than full.

You’re now in a giant cavern, in one of potentially hundreds of player-generated islands, in a potentially randomly generated world (important technical details for later). There’s a tunnel into the cavern just large enough for a ship to launch from, so being the agent of chaos that you are, you’ve built your ship in there, sails and all.

The island itself has a wind direction as part of a coarse global wind vector field, but you’re inside of a cave. Your ship has sails, you have a campfire at the cave’s mouth, there are plenty of dangly cave flora, and some grass growth at the cave mouth. What does the wind do here?

We know, intuitively, we should expect to see no, or very minimal, wind effects deep in the cave. If the wind is blowing into the cave mouth, we’d expect to see some smoke from the fire blown inwards, with its effects diminishing gradually. But if the wind were to change and no longer blow into the cave, we’d then expect the smoke to rise unaffected by it.

The problem then, with context established, is how do we know in a runtime-friendly way how inside something is, at a given point in space, potentially in the path of wind? We have effects driven by CPU & GPU systems, so whichever method we use has to be accessible to either. Thankfully this data doesn’t need to be overly precise or immediate, as the goal is to approximate noisy fluid flow which can’t be directly seen or measured.

The Dynamic Solution

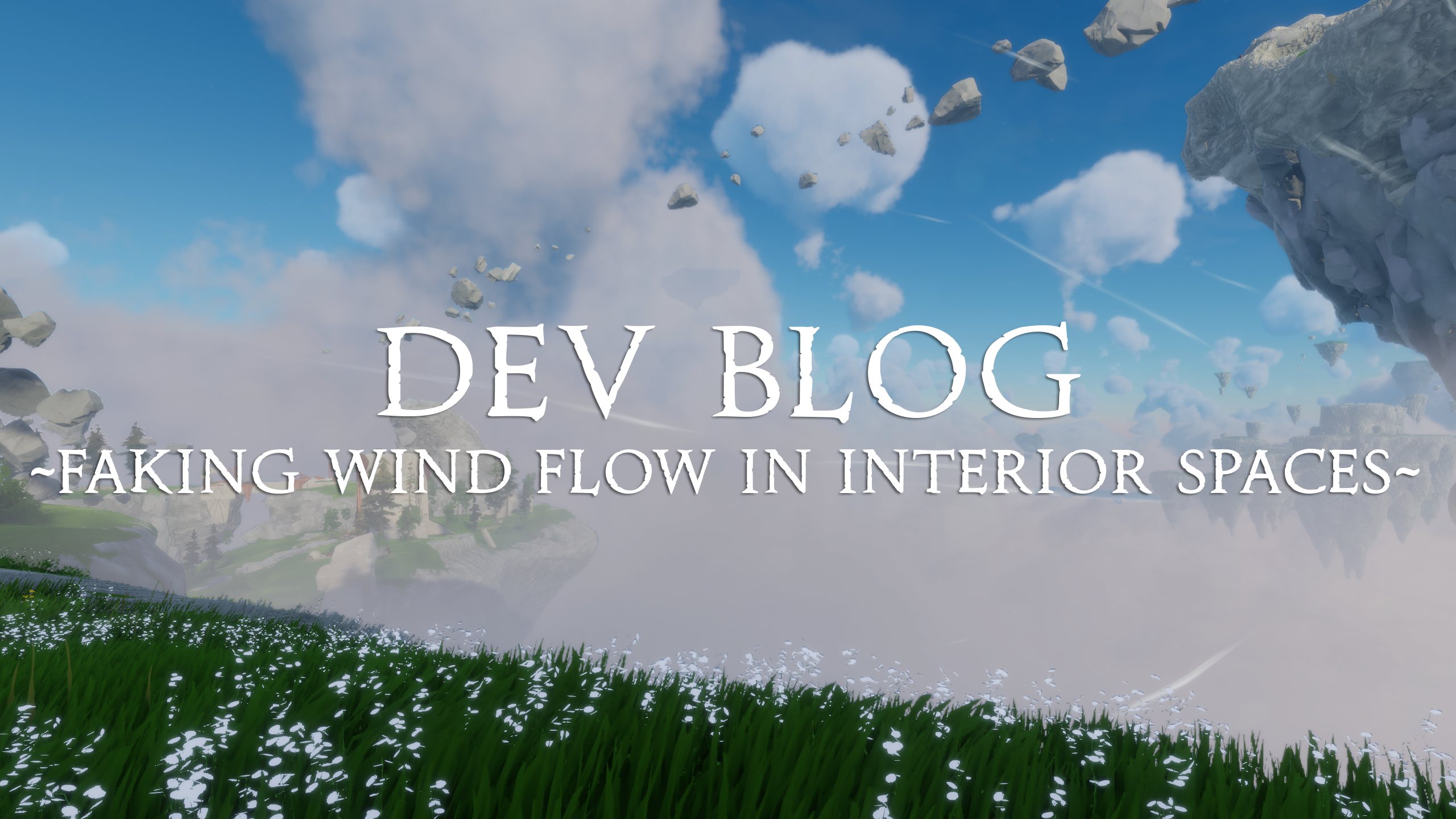

An obvious solution might be to query against the physics scene that’s present. We’d only need to ‘shotgun’ a handful of rays into the wind’s direction and count the number of collisions to get an approximate idea of how occluded something is to the wind. We could do this multiple times for particularly large objects if the need arose, or change the scatter pattern – or both. But we’d need to have quite a long raycast as our interior cavern might be really quite large.

The video clip above shows the “shotgun” effect of raycasts to determine how occluded the player is. Lines from the player represent the rays cast for the occlusion check. The colour of the large sphere gizmo rendering over the player shows occlusion value where black is fully occluded, white is unoccluded.
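As a rough illustration of the "shotgun" idea, here is a minimal sketch in Python rather than Unity code. It assumes the scene's colliders can be stood in for by a list of spheres, and the names `ray_hits_sphere`, `wind_occlusion`, `spread` and `max_dist` are all hypothetical. A real implementation would go through the engine's physics raycasts instead.

```python
import math
import random

def ray_hits_sphere(origin, direction, centre, radius, max_dist):
    """True if a ray of length max_dist (unit direction) touches the sphere."""
    to_centre = [c - o for o, c in zip(origin, centre)]
    # Distance along the ray to the point of closest approach, clamped to the ray.
    t = sum(a * b for a, b in zip(to_centre, direction))
    t = max(0.0, min(max_dist, t))
    closest = [o + d * t for o, d in zip(origin, direction)]
    dist_sq = sum((c - p) ** 2 for c, p in zip(centre, closest))
    return dist_sq <= radius * radius

def wind_occlusion(point, upwind, blockers, rays=16, spread=0.3,
                   max_dist=200.0, rng=None):
    """Fraction of scattered rays, cast towards the wind source, that hit something.

    upwind   -- unit vector pointing towards where the wind comes from
    blockers -- list of (centre, radius) spheres standing in for the physics scene
    Returns 0.0 (fully exposed) to 1.0 (fully occluded).
    """
    rng = rng or random.Random(0)
    hits = 0
    for _ in range(rays):
        # Jitter the direction to get the scatter pattern, then re-normalise.
        d = [u + rng.uniform(-spread, spread) for u in upwind]
        length = math.sqrt(sum(c * c for c in d))
        d = [c / length for c in d]
        if any(ray_hits_sphere(point, d, c, r, max_dist) for c, r in blockers):
            hits += 1
    return hits / rays
```

The returned fraction maps directly onto the gizmo colour described above: 1.0 would render black (fully occluded) and 0.0 white (unoccluded).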

In isolation, this would likely be enough, and not overly costly performance-wise. We could also amortise this check over several frames: rather than a single ‘blast’, we could constantly perform fewer casts in a random pattern to get a constantly shifting average.

Let’s scale up – at how many points in the world will we need to perform this check? 4 players, 4 campfires, 12 sails? The physics cost is starting to climb, but if we kept our collision mask for the check efficient, LOD’d, and load balanced things well it could be manageable.
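The amortised variant could look something like the sketch below, again in Python for readability. It assumes a hypothetical `cast_ray` callable that performs one randomly scattered raycast and returns whether it hit anything; the window size and rays-per-frame values are made up for illustration.

```python
from collections import deque

class AmortisedOcclusion:
    """Keeps a rolling average of recent ray results instead of one big burst."""

    def __init__(self, cast_ray, window=32, rays_per_frame=2):
        self._cast_ray = cast_ray              # hypothetical: returns True on a hit
        self._results = deque(maxlen=window)   # oldest results fall off automatically
        self._rays_per_frame = rays_per_frame

    @property
    def value(self):
        """Current occlusion estimate, 0.0 (exposed) to 1.0 (occluded)."""
        if not self._results:
            return 0.0
        return sum(self._results) / len(self._results)

    def update(self):
        """Call once per frame: cast a few rays and fold them into the average."""
        for _ in range(self._rays_per_frame):
            self._results.append(1.0 if self._cast_ray() else 0.0)
        return self.value
```

The trade-off is the usual one: a larger window gives a steadier value but takes longer to react when the wind direction or the surroundings change.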

What about every blade of grass, or in fact any shader-driven effect which needs per-vertex precision? This is where this approach starts to fall down.

We’d need to create a data structure better suited to this kind of lookup, and doing this continually at runtime doesn’t seem viable.

A Baked Solution?

So we bake.

Except we’ve already got a dataset which might serve our purpose, one which is already baked into our island data. And that’s lightprobes, or specifically in our case Unity’s new Adaptive Probe Volumes or APV.

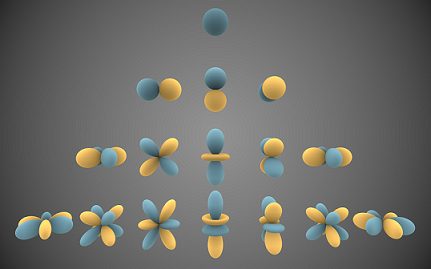

The helpful thing about lightprobe data in this format – specifically the grid layout of the APV – is that at regular points in space, lighting is able to be sampled quickly and cheaply. In this case it’s stored as first order (L1) Spherical Harmonics, which gives us an approximate light intensity and colour value for ambient light, and along each cardinal axis.
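For readers unfamiliar with L1 Spherical Harmonics, the sketch below shows the textbook way a single-channel value is reconstructed for a direction from one ambient (L0) coefficient and three directional (L1) coefficients. It is a Python illustration only. The function name `eval_sh_l1` and the coefficient ordering are assumptions, and Unity's APV packs and scales its coefficients differently, so this should not be read as the engine's sampling code.

```python
import math

# Standard real spherical harmonic basis constants for bands 0 and 1.
Y0 = 0.5 * math.sqrt(1.0 / math.pi)   # ~0.282095
Y1 = 0.5 * math.sqrt(3.0 / math.pi)   # ~0.488603

def eval_sh_l1(l0, l1, direction):
    """Reconstruct a single-channel value in `direction` (a unit vector).

    l0 -- ambient (band 0) coefficient
    l1 -- (x, y, z) band 1 coefficients; this ordering is an assumption
    """
    x, y, z = direction
    lx, ly, lz = l1
    return Y0 * l0 + Y1 * (lx * x + ly * y + lz * z)
```

With all three L1 coefficients at zero the result is the same in every direction, which is the "ambient" part; the L1 terms add a lobe that brightens one side and darkens the opposite side by the same amount.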

Why this is helpful for wind becomes clearer when you consider what this lightprobe data represents, and that’s a ray’s path from any light source, to our probe. This includes light bounces, so we can assume that at a point (and direction) in space, there should be wind if there is light.

You might have considered, however, that a desk lamp, or in our case glowing cave flora, would not be a suitable source of wind, which would be correct. For this solution to be viable we’d only want to care about values from our sky. I note ‘sky’ rather than ‘sun’ because we have a day night cycle, and our lighting data is baked using an incredibly bright ambient sky, not the sun at a particular time.

Thankfully, values from our sky are orders of magnitude brighter than any internal light source during the day (using physically based rendering). So one can assume that if a value’s over a threshold, the sky’s lighting is reaching our probe, and thus wind would be present.

However at night this becomes a little trickier as the sky intensity drops below that of most interior light sources. If it were just the sky intensity changing, we could just adjust our threshold with the time of day (and scenario blending between lighting data sets) and thus at night require lower brightness values to indicate “insideness”. But unfortunately that glowing mushroom which was at daytime significantly dimmer than our sky, is now the brightest light source for 500m (hypothetically).

We can still maintain a threshold as before, adjusting for time of day, but we discard intensity values over the maximum intensity we’d expect from the sky. This has a potential caveat that certain specifically dim light sources inside might read as windy in this system, but so far this approximation and fudging seems to be an acceptable compromise.

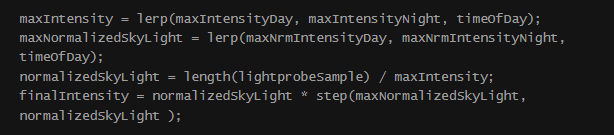

To give this thought some closure, the following is roughly what our sample function would look like:

This will give us a normalized finalIntensity which we can scale any wind effects by. We set our intensity values based on the time of day, and then normalise our light intensity based on this. Finally we zero the final intensity if our normalised intensity exceeds what we’d defined as the maximum. Ideally our max would be 1, and the normalized range would be a true 0-1 value, but in my current implementation, I’ve not been overly scientific about defining or measuring values, so it is definitely somewhat a fudge at present.
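The steps described above can be sketched as follows. This is a Python approximation rather than the shader code itself, and every number in it is a placeholder: `day_sky`, `night_sky` and `max_normalised` are hypothetical values, and `time_of_day` is assumed to be a 0 (night) to 1 (day) blend factor.

```python
def lerp(a, b, t):
    """Linear blend between a and b."""
    return a + (b - a) * t

def wind_intensity(light_intensity, time_of_day,
                   day_sky=10000.0, night_sky=10.0, max_normalised=1.2):
    """Turn a sampled probe intensity into a wind scale factor.

    light_intensity -- intensity sampled from the light probe data
    time_of_day     -- assumed blend factor: 0.0 = night, 1.0 = day
    """
    # 1. Pick the sky intensity we expect at this time of day.
    expected_sky = lerp(night_sky, day_sky, time_of_day)
    # 2. Normalise the sampled light against that expectation.
    normalised = max(0.0, light_intensity / expected_sky)
    # 3. Anything brighter than the sky could plausibly be is treated as an
    #    interior light source (the glowing mushroom) and contributes no wind.
    if normalised > max_normalised:
        return 0.0
    final_intensity = normalised
    return final_intensity
```

For example, with these placeholder values a probe reading of 5000 at midday gives 0.5, while a reading of 50 at night is five times brighter than the expected sky and is discarded as an interior light.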

Also, on re-writing this as pseudo code here, I’m now asking why I didn’t discard overly bright values before normalizing; however, I don’t anticipate the net result differs greatly.

So we have our data, and because it’s part of a system Unity and HDRP already fully utilise, it’s something we can begin sampling in our shaders immediately. In theory, ShaderGraph already provides the ability to sample this data, but because of the following section, I’ve created a custom HLSL helper for this sample to ensure uniformity in our system.

Async GPU Readback

I mentioned CPU and GPU availability of this data earlier, and I’d not forgotten.

APV probe (or brick) data is encoded to 3D textures, and thus easily available on the GPU (with existing Unity-written sampling functions usable), but it is not directly available to the CPU. You can get references to the texture objects, but not sample the RenderTextures, so we’ll need a system to do this.

This header’s title will now make sense: we’re going to have to read these textures on the GPU, and request this data back to the CPU. Those with experience here will know that reading data back off of the GPU can be slow, >100ms slow. This is because our rendering pipeline is optimised for sending data one way in a “fire and forget” fashion, and to retrieve data from the GPU requires the CPU to wait for the GPU to hit a sync point before the data is available.

The benefits of AsyncGPUReadback are that we avoid the CPU stall, at the cost of latency in getting the data we need. It’s worth pointing out that there’s still a GPU cost in terms of bandwidth and processing to this readback, one which I’ve yet to measure. If it turns out this GPU cost is too great, the potential solution for now might be to limit this feature to faster graphics cards. That said, the APV data is already uploaded to the GPU, and already in constant use.

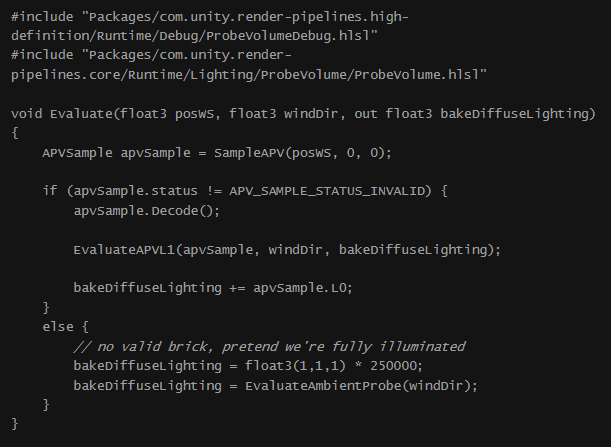

The way of returning this APV data to the CPU will be via a simple compute shader which samples the lightprobes in the same fashion as in a shader. The following is the APV sample function, borrowed from Unity’s own source, with some minor modifications so the same function can be used in our pixel shader and compute shader.

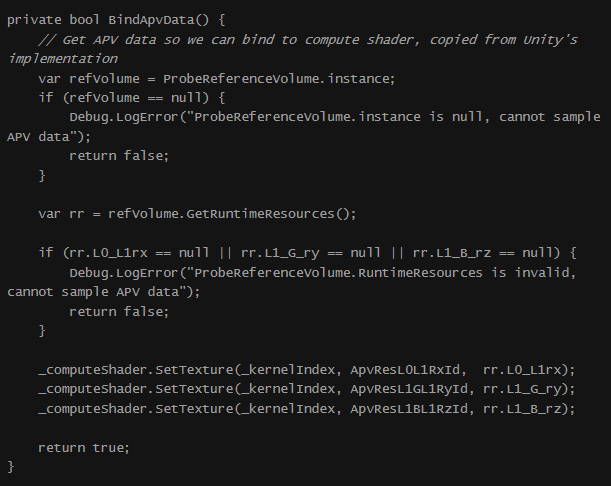

The wrinkle here is that Unity handles compute shader dispatches differently, and data bound to “global” shader variables in the rasterization pipeline is not accessible. It’s necessary to manually bind the lighting data to our compute shader, but thankfully there’s an accessible API to read this, ProbeReferenceVolume.GetRuntimeResources().

In the above method, we get the light probe coefficients from Unity’s own ProbeReferenceVolume class. Worth noting here, that as of HDRP 14, this data is the current result of the probe data loaded and blended, and not the entirety of the probe data. Had I been able to just sample the “day” data, it would have been altogether simpler.

Finally, to address the latency in this approach. Given the nature of what these values represent, that wind takes time to propagate, and is inherently noisy and chaotic, the observer is unlikely to notice a 3-4 frame delay in updates to this representation.

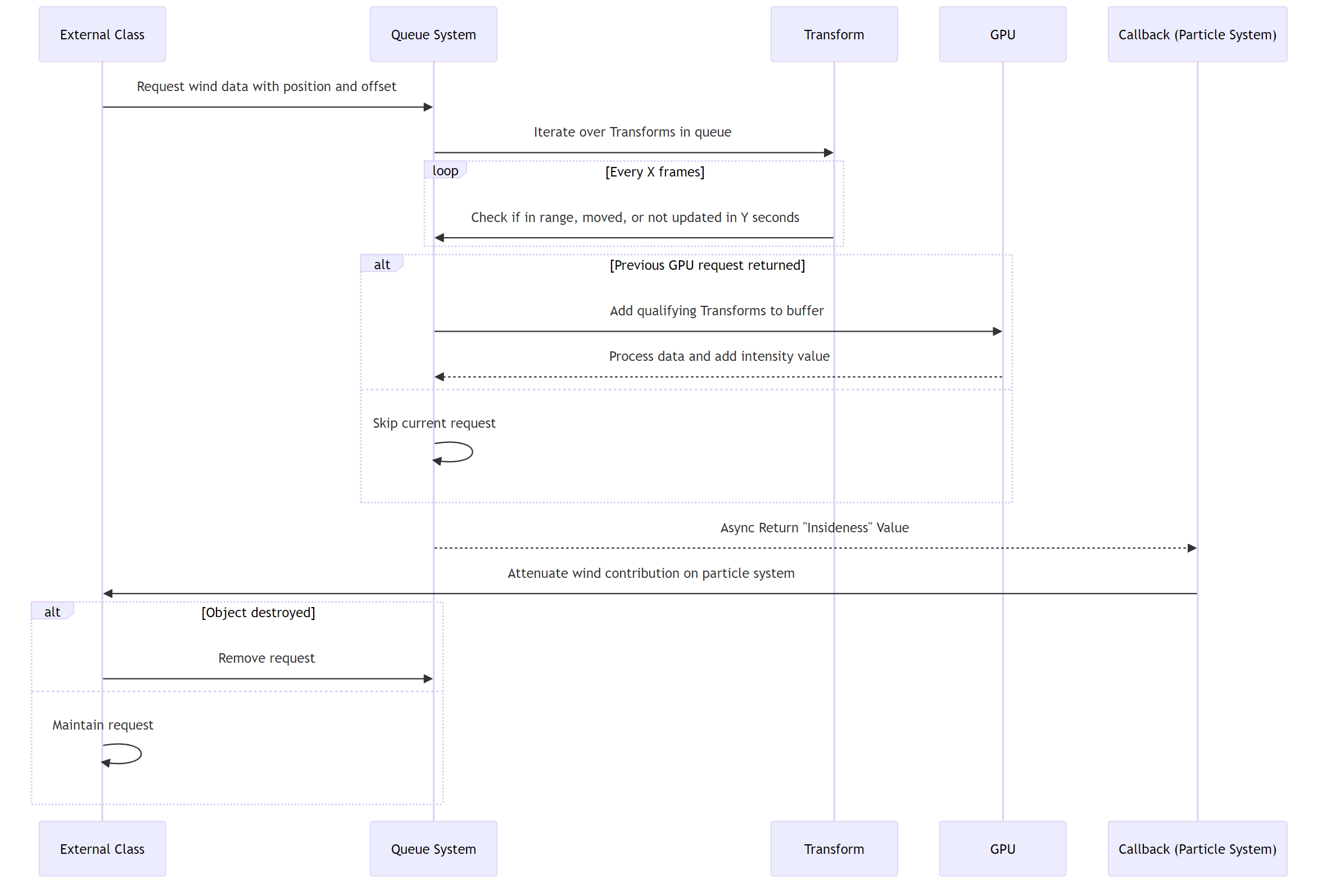

This async functionality does however require a more comprehensive implementation, and the system sampling this data needs to account for that. So in this endeavour, I’ve implemented a queue system which lets external classes request wind from a Transform’s position (with offset) in space, supplying a callback with the request. This system then supplies the “insideness” value to the callback once the request has returned from the GPU.

The system begins a request every x frames, and will iterate over all Transforms in its queue. Transforms which are in range, have moved a significant amount, or have not been updated within y seconds will then be added into our buffer to be sent to the GPU, other Transforms will be ignored this time.
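One possible reading of that filter is sketched below in Python. The post does not spell out how the three conditions combine, so this sketch assumes "in range, and either moved or stale". The names `TrackedPoint` and `needs_sample` and all threshold values are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class TrackedPoint:
    """Stand-in for a queued Transform and what we know about its last sample."""
    position: tuple
    last_sampled_position: tuple
    last_sampled_time: float

def needs_sample(point, observer, now,
                 max_range=150.0, min_move=0.5, max_age=2.0):
    """Decide whether this point goes into the next GPU request.

    Assumed logic: must be within range of the observer, and must either
    have moved a significant amount or not been refreshed recently.
    """
    if math.dist(point.position, observer) > max_range:
        return False
    moved = math.dist(point.position, point.last_sampled_position) >= min_move
    stale = (now - point.last_sampled_time) >= max_age
    return moved or stale
```

Filtering like this keeps the buffer sent to the GPU small, since stationary objects such as campfires only need refreshing occasionally.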

The request will only be sent to the GPU if the previous one has returned, otherwise we risk writing over the results of the previous request, as the compute shader, when dealing with the request, will add its intensity value into this buffer before it’s read back.

When this data arrives back to the CPU, the async callback is called, and each request is iterated over, and then sent back to the original caller. We now have a way of supplying any assets in the game an approximation of how much contribution our global wind system should have over it on our islands.
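The overall request and callback flow can be sketched as below. This is a simplified Python simulation, not the Unity implementation: `FakeGpu` is a hypothetical stand-in for the compute dispatch plus AsyncGPUReadback, returning results a fixed number of frames later, and `InsidenessQueue` treats each request as one-shot rather than keeping Transforms registered.

```python
from collections import deque

class FakeGpu:
    """Hypothetical stand-in: delivers results `latency` frames after a request."""

    def __init__(self, sample_fn, latency=3):
        self._sample_fn = sample_fn
        self._latency = latency
        self._pending = None   # [frames_left, positions, on_done] while in flight

    @property
    def busy(self):
        return self._pending is not None

    def request(self, positions, on_done):
        assert not self.busy, "previous request has not returned yet"
        self._pending = [self._latency, list(positions), on_done]

    def tick(self):
        """Advance one frame; fire the callback when the readback 'arrives'."""
        if self._pending is None:
            return
        self._pending[0] -= 1
        if self._pending[0] <= 0:
            _, positions, on_done = self._pending
            self._pending = None
            on_done([self._sample_fn(p) for p in positions])

class InsidenessQueue:
    def __init__(self, gpu, interval=2):
        self._gpu = gpu
        self._interval = interval   # begin a request every `interval` frames
        self._frame = 0
        self._waiting = deque()     # (position, callback) pairs not yet sent

    def request(self, position, callback):
        self._waiting.append((position, callback))

    def update(self):
        """Call once per frame."""
        self._gpu.tick()
        self._frame += 1
        if self._frame % self._interval != 0:
            return
        # Never send while a request is in flight: its buffer would be overwritten.
        if self._gpu.busy or not self._waiting:
            return
        batch = list(self._waiting)
        self._waiting.clear()

        def on_done(values):
            # Hand each result back to whoever asked for it.
            for (_, callback), value in zip(batch, values):
                callback(value)

        self._gpu.request([pos for pos, _ in batch], on_done)
```

A caller would do something like `queue.request(sail_position, sail.set_insideness)` and simply keep using its last known value until the callback fires a few frames later.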

Caveats & Limitations

It would be remiss of me to leave it here suggesting this approach was done and compromise free. Let’s knock me down a few pegs and explore what’s perhaps an issue.

GPU Load

As mentioned above, I’ve not currently explored exactly what the GPU load is with the async readback call. CPU load of this system is negligible, with the only potential issue being the memory allocation to the callback with every async request.

If it turns out GPU load is an issue, aside from reducing frequency of the calls, or number of requests per call, I sadly don’t see a solution. As I mentioned above, if this proves too costly, it’ll mean this might need to become an optional quality setting, and not something which can be relied on by gameplay logic.

Latency

I’m not particularly concerned about the latency here, but it’s still a drawback. The situation in which this latency might be noticed will be when instantiating sails in caves to begin with, and seeing them spend a few frames under a default wind value. Defaulting to full wind is an issue in caves, however defaulting to no wind could be a problem if our system never successfully fulfils a request – which is possible. A 50% wind occlusion might be a satisfactory compromise as it could prevent a sudden and short “pop” when the sail changes state.

Alternatively, a future improvement for the CPU system is smoothing the returned “insideness” value so there are no abrupt changes, thus adding a further delay.
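Both ideas, the 50% default and the smoothing, could be combined along the lines of the sketch below. It is a Python illustration using simple exponential smoothing; the class name `SmoothedInsideness` and the `rate` value are assumptions, not something taken from the game.

```python
import math

class SmoothedInsideness:
    """Eases towards the latest value returned from the GPU instead of snapping."""

    def __init__(self, initial=0.5, rate=4.0):
        self.value = initial      # start at 50% until a real result arrives
        self._target = initial
        self._rate = rate         # higher = faster response, less smoothing

    def push(self, target):
        """Called from the readback callback with the freshly sampled value."""
        self._target = target

    def update(self, dt):
        """Called every frame with the frame time in seconds."""
        # Frame-rate independent exponential approach to the target.
        blend = 1.0 - math.exp(-self._rate * dt)
        self.value += (self._target - self.value) * blend
        return self.value
```

If no request is ever fulfilled the value simply stays at the 50% default, which matches the compromise suggested above.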

Only As Good As the APV Data

Like a lot of light baking solutions, APV makes a trade-off between speed and quality. The goal of performing a computation of lighting data “offline” is to perform the heavy calculations once, and save to disk, so the runtime cost is much lower.

With APV there are occasions where light probes close to geometry evaluate darker than you’d expect, likely because they’ve failed a “validity check”, and are thus not used. This might lead to slightly darker spots at places in your lighting data. You’ll also have intensity stepping along corridors which do not run parallel to the APV’s grid layout (aliasing) which might on occasion lead to odd results.

When you can manually author environments to specifically ease these lighting issues, or even add in corrective volumes, as APV allows, these compromises work out very minor. However when your lightbake is an automated process as part of a larger pipeline of potentially 100s of islands, you have a much harder time massaging the data into a better shape.

Whilst APV for this task seems fairly robust so far, any issues or artifacts we experience with lighting data will also be felt in our “insideness” checks, even if they are less impactful.

Dim Night Lights

I briefly mentioned this above, but it’s a compromise with the night aspect of this system: interior lights which are of a similar intensity to the “sky” would be regarded as exterior. I’ve yet to notice this, although given the size of our islands and world, it’ll take some exploration to do so.

What about on Ships?

We currently don’t have a pipeline for baking in lighting data for ships as they are not static, thus ships would not serve to occlude wind. We might have to fall back to a raycast solution here.

So what do I have now?

I have debug views of this “insideness” value changing with lightprobes and being mostly stable through time of day, but very little in the game utilises this so far, currently only sails and dynamic grass. I’ll need to follow up with a series of more practical examples of this in action. Naturally the merit of this approach will be determined significantly by the results. However, the journey’s still interesting, and there are a number of concepts and techniques described during this process which remain valuable regardless of output.

Through this process I’ve been able to explore lightprobes and some core mathematical concepts which drive them, Unity’s APV volumes in more specific detail, and got to grips with async GPU readbacks and their required CPU systems.

HDRP’s APV system was implemented into our island creator and baking process by the father of the Island Creator, Tom Jackson (he gave some early insight into some of his exploits back on the original Island Creator for Worlds Adrift here).

As I continue to experiment with light probes, Adaptive Probe Volumes, and wind, the journey is as valuable as the destination. Each step not only adds a layer of realism to Lost Skies but also helps me develop my skills as a developer and Technical Artist. It’s these intricate details which audiences often take for granted, but add to the feel of a convincing and compelling world. I look forward to showing off more soon.